CCA-500 Online Practice Questions and Answers

Each node in your Hadoop cluster, running YARN, has 64GB memory and 24 cores. Your yarn-site.xml has the following configuration:

You want YARN to launch no more than 16 containers per node. What should you do?

A. Modify yarn-site.xml with the following property:

B. Modify yarn-site.xml with the following property:

C. Modify yarn-site.xml with the following property:

D. No action is needed: YARN's dynamic resource allocation automatically optimizes the node memory and cores
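As a rough aid for reasoning about container limits, the sketch below estimates an upper bound on concurrent containers per NodeManager. The numbers passed in are hypothetical, since the configuration from the question is not reproduced here, and the function name is illustrative. Whether vcores actually constrain scheduling depends on the scheduler's resource calculator, so treat the vcore term as an assumption.

```python
def max_containers(node_memory_mb: int, min_allocation_mb: int,
                   node_vcores: int, min_allocation_vcores: int = 1) -> int:
    """Estimate an upper bound on containers running at once on one node.

    node_memory_mb        ~ yarn.nodemanager.resource.memory-mb
    min_allocation_mb     ~ yarn.scheduler.minimum-allocation-mb
    node_vcores           ~ yarn.nodemanager.resource.cpu-vcores
    min_allocation_vcores ~ yarn.scheduler.minimum-allocation-vcores
    """
    by_memory = node_memory_mb // min_allocation_mb
    by_vcores = node_vcores // min_allocation_vcores
    # The tighter of the two limits decides (assumes both are enforced).
    return min(by_memory, by_vcores)


# Hypothetical values: 32768 MB offered to YARN, 2048 MB minimum container.
print(max_containers(32768, 2048, 24))
```

With these assumed values memory is the binding limit (32768 / 2048 = 16), not the 24 cores.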

You are configuring your cluster to run HDFS and MapReduce v2 (MRv2) on YARN. Which two daemons need to be installed on your cluster's master nodes? (Choose two)

A. HMaster

B. ResourceManager

C. TaskManager

D. JobTracker

E. NameNode

F. DataNode

You are working on a project where you need to chain together MapReduce and Pig jobs. You also need the ability to use forks, decision points, and path joins. Which ecosystem project should you use to perform these actions?

A. Oozie

B. ZooKeeper

C. HBase

D. Sqoop

E. HUE

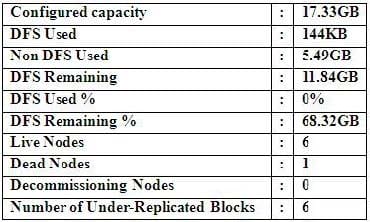

Cluster Summary:

45 files and directories, 12 blocks = 57 total. Heap size is 15.31 MB / 193.38 MB (7%)

Refer to the above screenshot.

You configure a Hadoop cluster with seven DataNodes, and one of your monitoring UIs displays the details shown in the exhibit.

What does this tell you?

A. The DataNode JVM on one host is not active

B. Because your under-replicated blocks count matches the Live Nodes, one node is dead, and your DFS Used % equals 0%, you can't be certain that your cluster has all the data you've written to it.

C. Your cluster has lost all HDFS data which had blocks stored on the dead DataNode

D. The HDFS cluster is in safe mode

Which two features does Kerberos security add to a Hadoop cluster? (Choose two)

A. User authentication on all remote procedure calls (RPCs)

B. Encryption for data during transfer between the Mappers and Reducers

C. Encryption for data on disk ("at rest")

D. Authentication for user access to the cluster against a central server

E. Root access to the cluster for users hdfs and mapred but non-root access for clients

You are migrating a cluster from MapReduce version 1 (MRv1) to MapReduce version 2 (MRv2) on YARN. You want to maintain your MRv1 TaskTracker slot capacities when you migrate. What should you do?

A. Configure yarn.applicationmaster.resource.memory-mb and yarn.applicationmaster.resource.cpu-vcores so that ApplicationMaster container allocations match the capacity you require.

B. You don't need to configure or balance these properties in YARN as YARN dynamically balances resource management capabilities on your cluster

C. Configure mapred.tasktracker.map.tasks.maximum and mapred.tasktracker.reduce.tasks.maximum in yarn-site.xml to match your cluster's capacity set by the yarn-scheduler.minimum-allocation

D. Configure yarn.nodemanager.resource.memory-mb and yarn.nodemanager.resource.cpu-vcores to match the capacity you require under YARN for each NodeManager
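To make the slot-to-resource idea concrete, here is a minimal sketch that converts MRv1 slot counts into per-NodeManager memory and vcore figures. The slot counts, the per-task memory, and the one-vcore-per-slot mapping are all assumptions chosen for illustration; real migrations usually also reserve headroom for the OS and other daemons.

```python
def nodemanager_capacity(map_slots: int, reduce_slots: int,
                         mb_per_task: int, vcores_per_task: int = 1) -> dict:
    """Map MRv1 slot counts to the two NodeManager resource properties.

    Assumes every former slot becomes one container of mb_per_task memory
    and vcores_per_task vcores (an illustrative simplification).
    """
    slots = map_slots + reduce_slots
    return {
        "yarn.nodemanager.resource.memory-mb": slots * mb_per_task,
        "yarn.nodemanager.resource.cpu-vcores": slots * vcores_per_task,
    }


# Hypothetical node: 8 map slots, 4 reduce slots, 2048 MB per task.
capacity = nodemanager_capacity(map_slots=8, reduce_slots=4, mb_per_task=2048)
for name, value in capacity.items():
    print(f"{name} = {value}")
```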

You're upgrading a Hadoop cluster from HDFS and MapReduce version 1 (MRv1) to one running HDFS and MapReduce version 2 (MRv2) on YARN. You want to set and enforce a block size of 128MB for all new files written to the cluster after upgrade. What should you do?

A. You cannot enforce this, since client code can always override this value

B. Set dfs.block.size to 128M on all the worker nodes, on all client machines, and on the NameNode, and set the parameter to final

C. Set dfs.block.size to 128M on all the worker nodes and client machines, and set the parameter to final. You do not need to set this value on the NameNode

D. Set dfs.block.size to 134217728 on all the worker nodes, on all client machines, and on the NameNode, and set the parameter to final

E. Set dfs.block.size to 134217728 on all the worker nodes and client machines, and set the parameter to final. You do not need to set this value on the NameNode
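The byte value in the options above is 128 MB written out in bytes. A short check of that arithmetic (the helper name is illustrative):

```python
def mb_to_bytes(megabytes: int) -> int:
    """Convert binary megabytes (1 MB = 1024 * 1024 bytes) to bytes."""
    return megabytes * 1024 * 1024


print(mb_to_bytes(128))  # 134217728
```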

You decide to create a cluster which runs HDFS in High Availability mode with automatic failover, using Quorum Storage. What is the purpose of ZooKeeper in such a configuration?

A. It only keeps track of which NameNode is Active at any given time

B. It monitors an NFS mount point and reports if the mount point disappears

C. It both keeps track of which NameNode is Active at any given time, and manages the Edits file, which is a log of changes to the HDFS filesystem

D. It only manages the Edits file, which is a log of changes to the HDFS filesystem

E. Clients connect to ZooKeeper to determine which NameNode is Active

Your cluster implements HDFS High Availability (HA). Your two NameNodes are named nn01 and nn02. What occurs when you execute the command: hdfs haadmin -failover nn01 nn02?

A. nn02 is fenced, and nn01 becomes the active NameNode

B. nn01 is fenced, and nn02 becomes the active NameNode

C. nn01 becomes the standby NameNode and nn02 becomes the active NameNode

D. nn02 becomes the standby NameNode and nn01 becomes the active NameNode

You have recently converted your Hadoop cluster from a MapReduce 1 (MRv1) architecture to a MapReduce 2 (MRv2) on YARN architecture. Your developers are accustomed to specifying the number of map and reduce tasks (resource allocation) when they run jobs. A developer wants to know how to specify the number of reduce tasks when a specific job runs. Which method should you tell that developer to implement?

A. MapReduce version 2 (MRv2) on YARN abstracts resource allocation away from the idea of "tasks" into memory and virtual cores, thus eliminating the need for a developer to specify the number of reduce tasks, and indeed preventing the developer from specifying the number of reduce tasks.

B. In YARN, resource allocation is a function of megabytes of memory in multiples of 1024 MB. Thus, they should specify the amount of memory resource they need by executing -D mapreducereduces.memory-mb=2048

C. In YARN, the ApplicationMaster is responsible for requesting the resources required for a specific launch. Thus, executing -D yarn.applicationmaster.reduce.tasks=2 will specify that the ApplicationMaster launch two task containers on the worker nodes.

D. Developers specify reduce tasks in the exact same way for both MapReduce version 1 (MRv1) and MapReduce version 2 (MRv2) on YARN. Thus, executing -D mapreduce.job.reduces=2 will specify reduce tasks.

E. In YARN, resource allocation is a function of virtual cores specified by the ApplicationManager making requests to the NodeManager where a reduce task is handled by a single container (and thus a single virtual core). Thus, the developer needs to specify the number of virtual cores to the NodeManager by executing -p yarn.nodemanager.cpu-vcores=2
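For readers unfamiliar with passing a property through -D, the sketch below assembles a `hadoop jar` command line that sets mapreduce.job.reduces. The jar name, driver class, and paths are hypothetical, and the -D option is only honored if the job's driver parses generic options (for example through ToolRunner), which is assumed here.

```python
import shlex


def build_job_command(jar: str, main_class: str, reduces: int,
                      job_args: list[str]) -> list[str]:
    """Build an argv list for `hadoop jar` with the reduce count set via -D.

    Generic options such as -D must come before the job's own arguments.
    """
    return ["hadoop", "jar", jar, main_class,
            "-D", f"mapreduce.job.reduces={reduces}",
            *job_args]


# Hypothetical jar, class, and HDFS paths, for illustration only.
cmd = build_job_command("wordcount.jar", "WordCount", 2, ["/input", "/output"])
print(shlex.join(cmd))
```

Building the command as a list rather than a single string keeps each argument separate, so it can be handed to `subprocess.run` without shell quoting concerns.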